
Jay Whang

I'm a research scientist at Google DeepMind working on Gemini. I received my PhD from UT Austin under Prof. Alex Dimakis. Before that, I studied CS and math at USC and Stanford, and spent a few years at YouTube training classifiers and building backend infrastructure.

CV | Google Scholar | Twitter | LinkedIn | GitHub


Research

My research interests lie broadly in deep generative modeling, with the goal of making it work at scale. In particular, I’m interested in using likelihood-based models for useful downstream tasks such as image/video generation, inverse problems, and compression.

Publications


Imagen Video: High Definition Video Generation with Diffusion Models

Jonathan Ho*, William Chan*, Chitwan Saharia*, Jay Whang*, Ruiqi Gao, Alexey Gritsenko, Diederik P. Kingma, Ben Poole, Mohammad Norouzi, David J. Fleet, Tim Salimans*

arXiv 2022 | project page

A cascade of 3D diffusion models that generates high-definition videos from text prompts through a sequence of spatial and temporal upscaling.


Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding

Chitwan Saharia*, William Chan*, Saurabh Saxena†, Lala Li†, Jay Whang†, Emily Denton, Seyed Kamyar Seyed Ghasemipour, Burcu Karagol Ayan, S. Sara Mahdavi, Rapha Gontijo Lopes, Tim Salimans, Jonathan Ho†, David J. Fleet†, Mohammad Norouzi*

NeurIPS 2022 (Outstanding Paper) | project page

A diffusion-based text-to-image model that generates photorealistic images with deep language understanding.


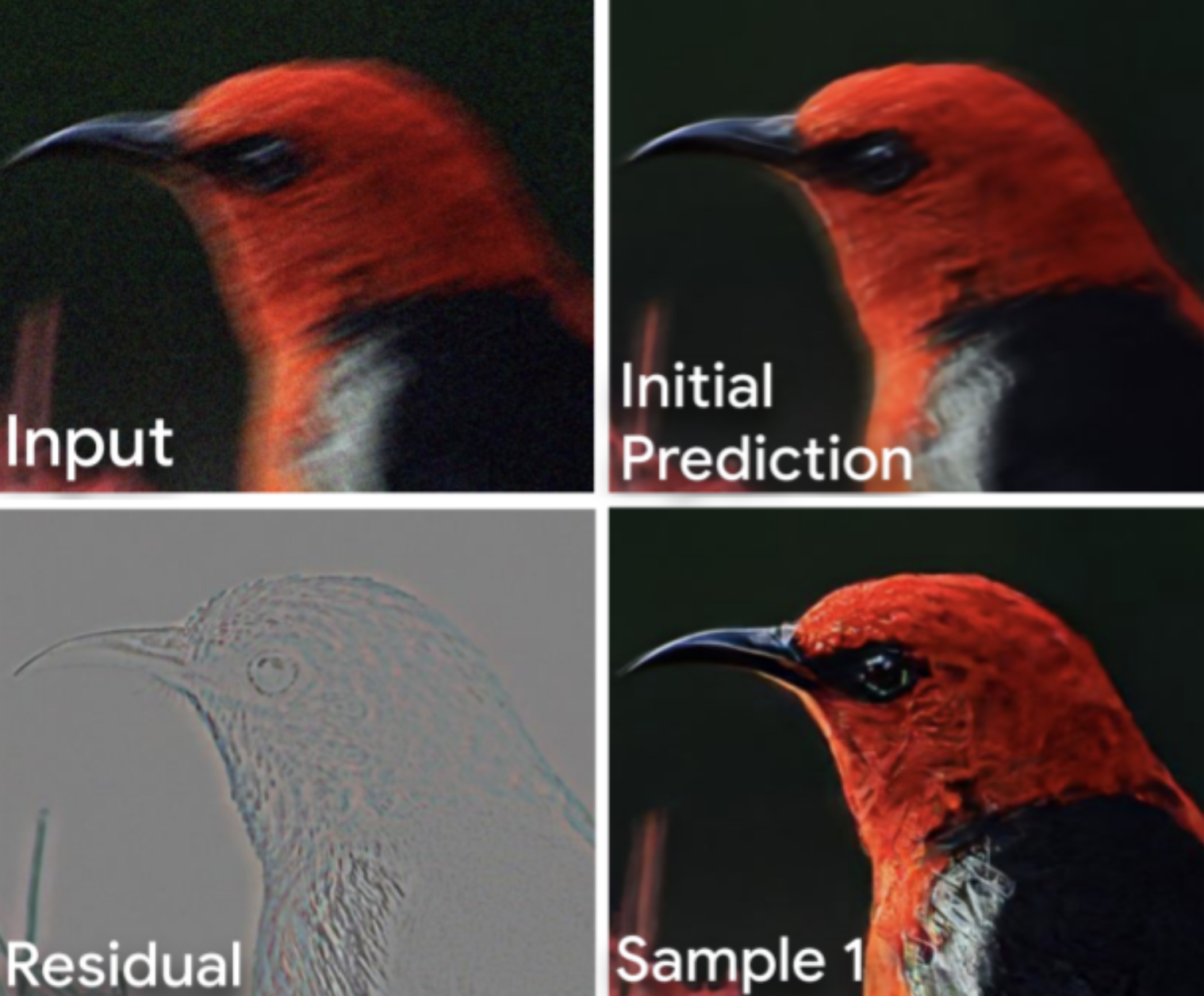

Deblurring via Stochastic Refinement

Jay Whang, Mauricio Delbracio, Hossein Talebi, Chitwan Saharia*, Alexandros G. Dimakis, Peyman Milanfar

CVPR 2022 (Oral Presentation)

A stochastic image deblurring model that consists of a deterministic predictor and a lightweight diffusion head.


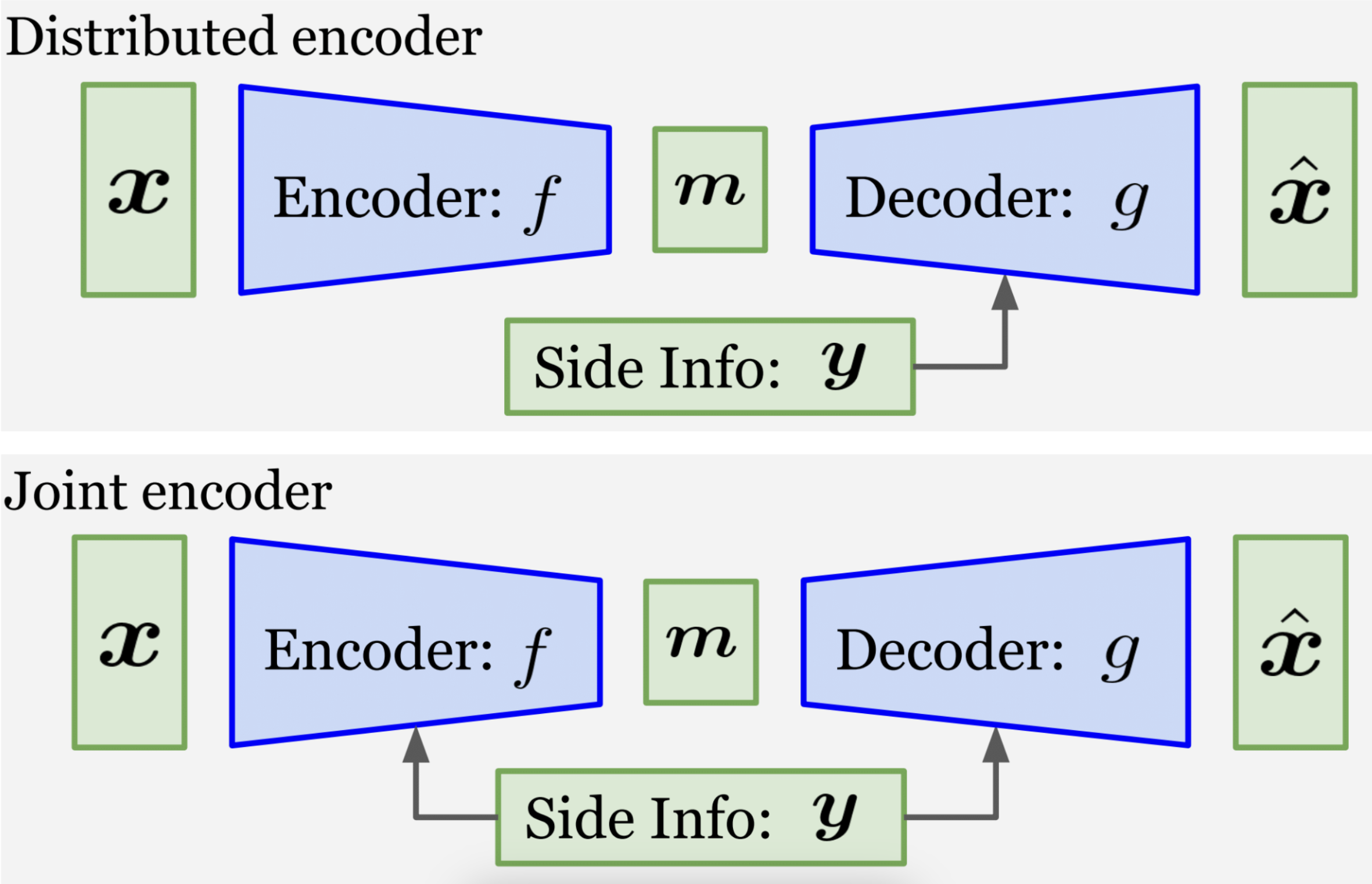

Neural Distributed Source Coding

Jay Whang*, Alliot Nagle*, Anish Acharya, Hyeji Kim, Alexandros G. Dimakis

JSAIT 2024 | code

A learned lossy compressor with side information, trained end-to-end, that serves as a practical instantiation of Wyner-Ziv coding.


Composing Normalizing Flows for Inverse Problems

Jay Whang, Erik M. Lindgren, Alexandros G. Dimakis

ICML 2021 | UAI 2021 Workshop on Tractable Probabilistic Modeling (Best Paper Award)

A technique for solving inverse problems distributionally, using a pretrained normalizing flow model as a prior and composing it with another learned flow model.


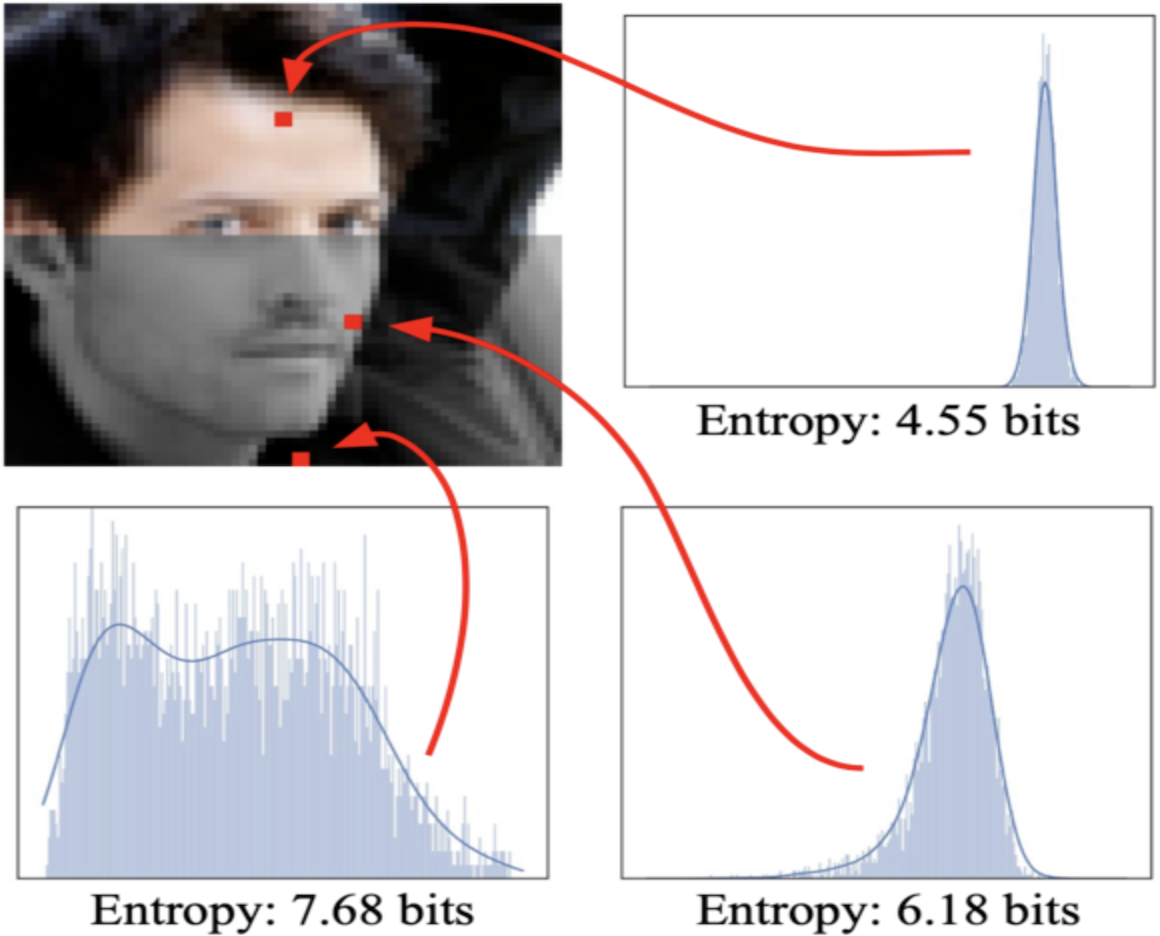

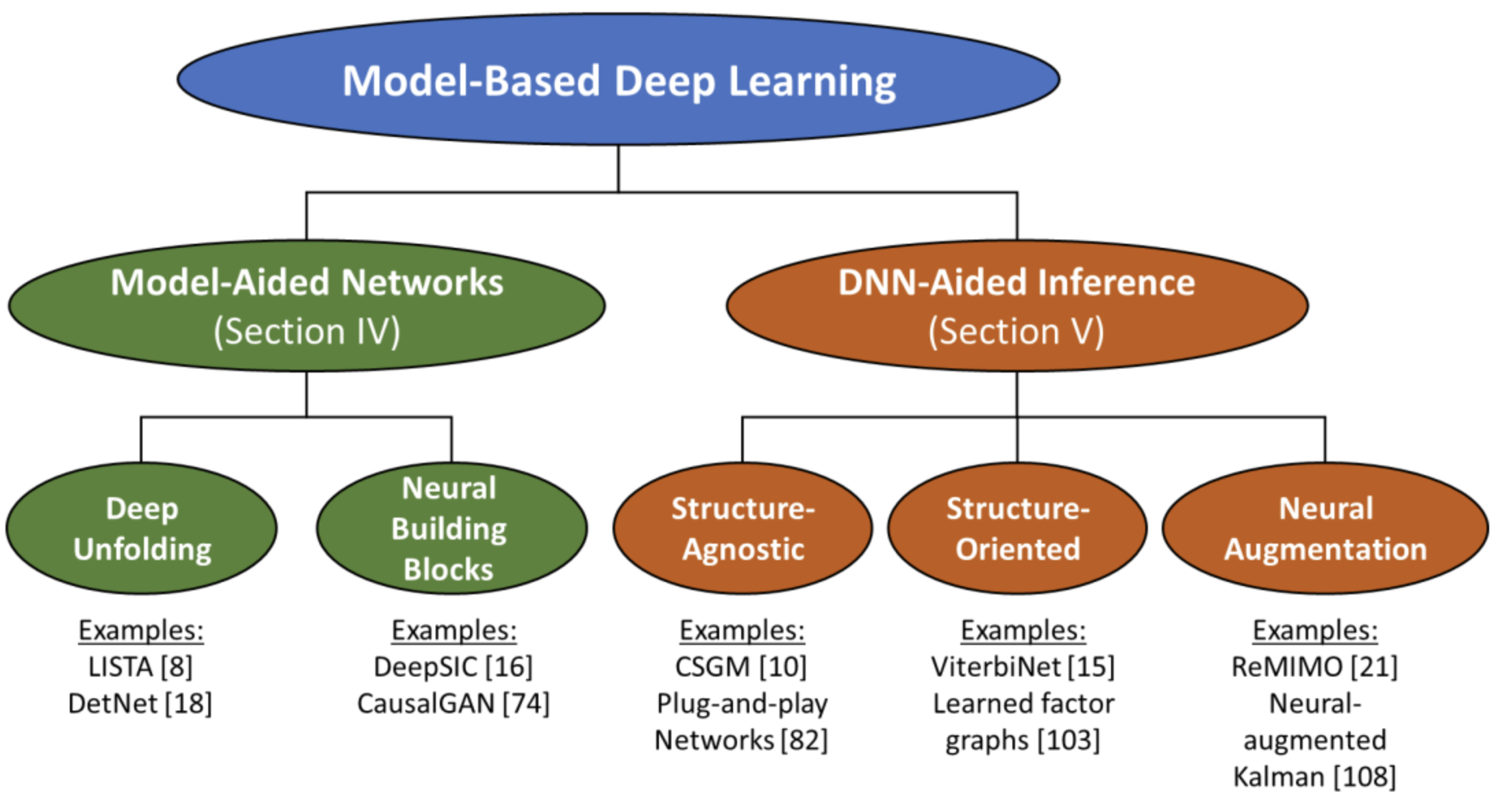

Model-Based Deep Learning

Nir Shlezinger, Jay Whang, Yonina Eldar, Alexandros G. Dimakis

arXiv 2021 | Short version at IEEE Data Science & Learning Workshop 2021 (Audience Choice Award)

A comprehensive review of hybrid methods that combine deep learning with classical modeling techniques for signal processing problems.


Solving Inverse Problems with a Flow-based Noise Model

Jay Whang, Qi Lei, Alexandros G. Dimakis

ICML 2021

A technique for handling complex noise in inverse problems by modeling the noise with a dedicated flow-based model alongside a deep generative prior.


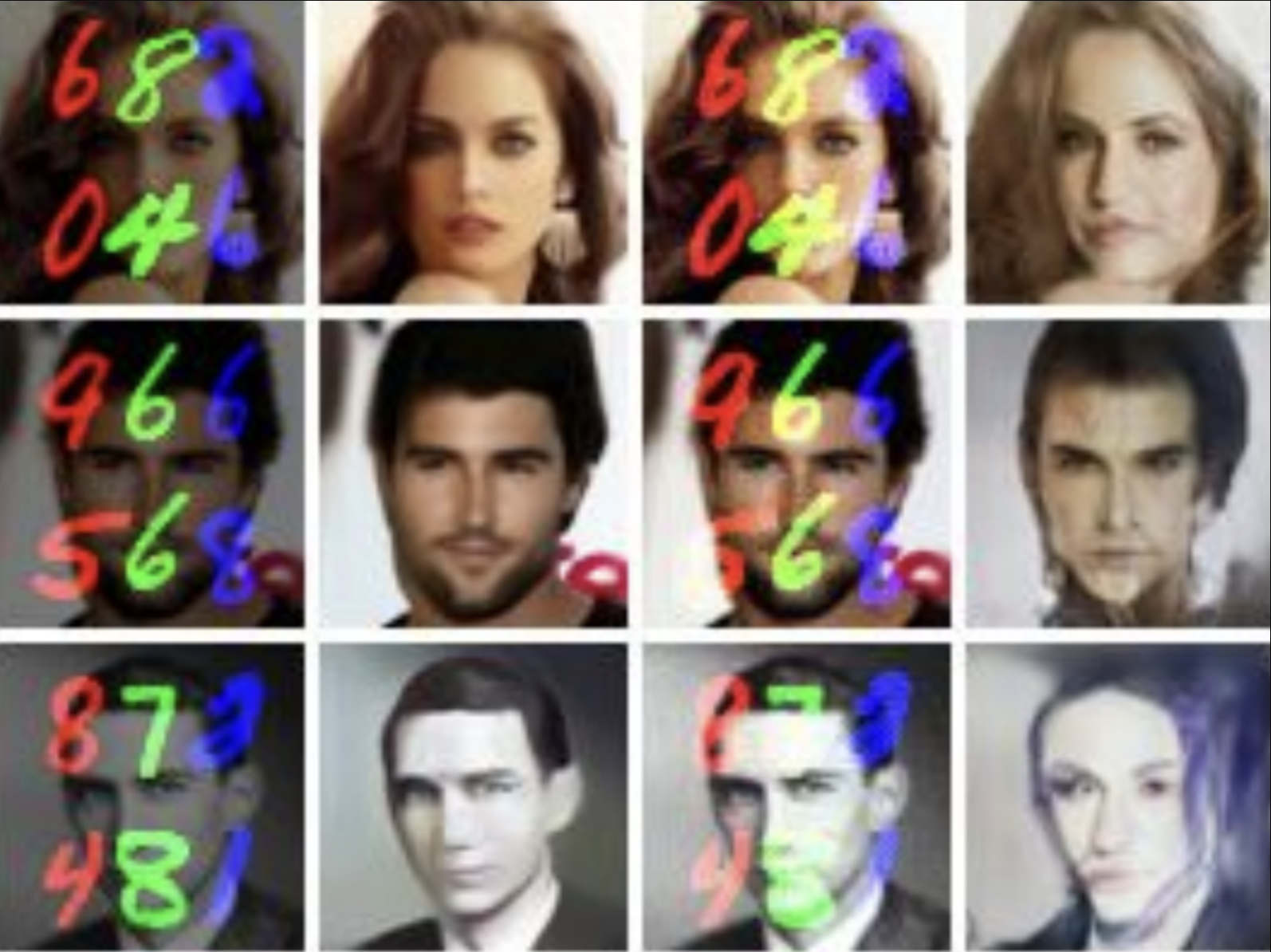

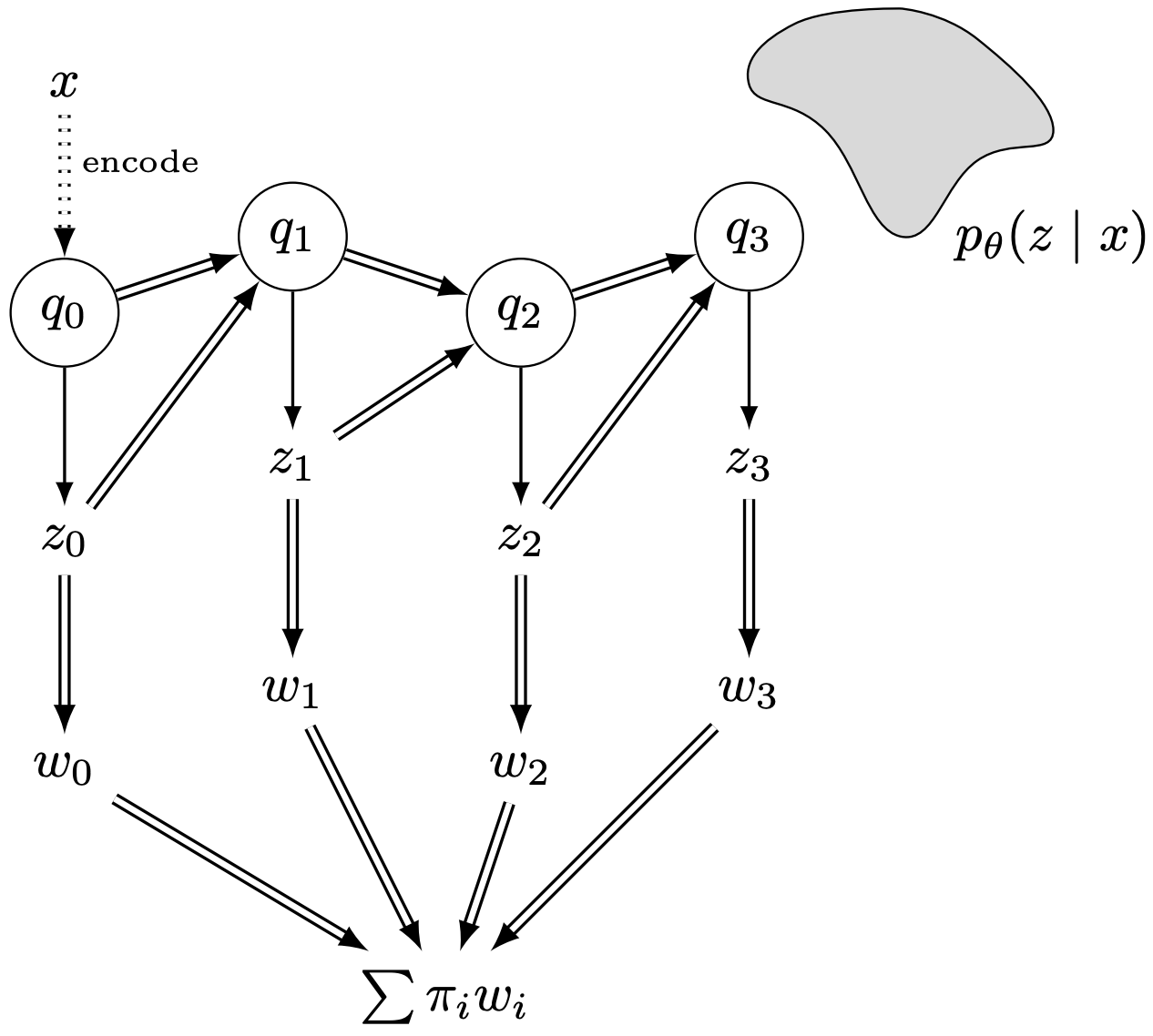

Training Variational Autoencoders with Buffered Stochastic Variational Inference

Rui Shu, Hung Bui, Jay Whang, Stefano Ermon

AISTATS 2019

A technique for improving stochastic variational inference by reusing intermediate variational proposal distributions.


Fast Exploration with Simplified Models and Approximately Optimistic Planning in Model Based Reinforcement Learning

Ramtin Keramati*, Jay Whang*, Patrick Cho*, Emma Brunskill

arXiv 2018 | Short version at ICML 2018 Workshop on Exploration in RL

A model-based RL technique that improves sample efficiency in hard-exploration environments through optimistic exploration and fast learning via object representations.


Template credits: Jon Barron, Changan Chen